Sedimentary Facies Machine Learning - XGBoost with Intelligent Bayesian Optimization

Let the Computer name the Formation

- Introduction

- Let’s get going!

- Step 1: Load

- Step 2: Explore

- Step 3: Augmentation

- 4. Train, then Predict Test Facies

- Step 5: Evaluate

- Conclusion

- Acknowledgements:

- References

Introduction

Structured data classification is one of the important and typical task in supervised machine learning (ML). Assigning categories to structured data, which can be anything stored in a spereadsheet, relational database content (SQL), csv file etc. Data conetents may be names, dates, addresses, credit card numbers, stock information, geolocation, etc. In this article, I would like to demonstrate how we can do data classification on geophysical data using Python, XGBoost, and a little bit of Scikit-Optimize to predict sedimentological facies.

The Society of Exploration Geophysicists (SEG), Matt Hall (Agile), and Brendon Hall (Enthought) ran in 2016 a competition on who can create the best Machine Learning model in classifying sedimentary facies in several wells using wireline measurements. For the dataset provided, a subject-matter expert (e.g., sedimentologist) manually described core sections and gave the facies names, which is calles labeling. This complete dataset can be used to train a Machine Learning Model. Note that in Artificial Intelligence rules are not hard-coded as in traditional programming, rather we give the computer the inputs and outputs, and the let it figure out the rules. These rules can then be used to make predictions on new, unseen, data. In this case we can then use new wireline data to predict sedimentological facies.

The dataset, along with instructions were made available online in an article in SEG The Leading Edge “Facies classification using machine learning”. The article included a link to some starter code in a Jupyter notebook with a simple Support Vector Machine model that had to be surpassed in accuracy.

In 2017, the results of the SEG competition were published in the SEG Leading Edge journal including all submission files for contestants to learn from each other. Teams were allowed to send new and improved models until the deadline. Two years later Brandon Hall penned a brief lookback on the merits, aftermath, and innovations that have been sparked by the ML contest he co-organized.

The goal of this discussion is working through what a good submission did to improve on the starter code, but does not necessarily have to be the winning submission. Since the ML contest took place the XGBoost ensemble learning algorithm has taken on larger popularity. However, it not that easy to use, since there are many, many hyperparameter to tune. For that reason I decided to utilize Scikit Optimize, which was released first in August 2016, and I would like to see what benefits it has over Grid Search or Random Search, which was commonly used at the time of the ML contest.

What does the data set look like? Originally, the SEG data were provided by the University of Kansas on the Hugoton and Panoma gas fields in SW Kansas ( Tim Carr and Robert S. Sawin (1996) Hugoton Natural Gas Area of Kansas, Kansas Geological Survey, Public Information Circular (PIC) 5). Encountered facies are interbedded deposits of carbonate (limestone and dolomite) and shale. The train dataset contains over 4000 training samples from ten different wells that were measured at half-foot downhole distances. These samples contain seven features (predictor variables). The seven features are: gamma ray (GR), resistivity (ILD_log10), photoelectric effect (PE), density/neutron porosity difference (DeltaPHI), average density/neutron porosity (PHIND), nonmarine/marine indicator (NM_M), and relative position (RELPOS). The test set was labeled by geologists with the correct facies information. Additionally, a test set of 800 samples was provided containing two wells but without facies labels. Goal is to train a classifier on the training set and predict correct facies on the test set. However, the true test labels remain unknown to the contestants. The published test-set F1 scores were calculated by the organizers. The best submitted classifier had an F1 score of 0.641 on the test set! This is something we can work with and strive to beat.

| Facies | Description | Label | Adjacent facies |

|---|---|---|---|

| 1 | Nonmarine sandstone | SS | 2 |

| 2 | Nonmarine coarse siltstone | CSiS | 1,3 |

| 3 | Nonmarine fine siltstone | FSiS | 2 |

| 4 | Marine siltstone and shale | SiSh | 5 |

| 5 | Mudstone | MS | 4,6 |

| 6 | Wackestone | WS | 5,7,8 |

| 7 | Dolomite | D | 6,8 |

| 8 | Packstone-grainstone | PS | 6,7,9 |

| 9 | Phylloid-algal bafflestone | BS | 7,8 |

| 11 | Marine sandstone+ | N/A | 4,6,8 |

+Only present in test set, cannot be trained.

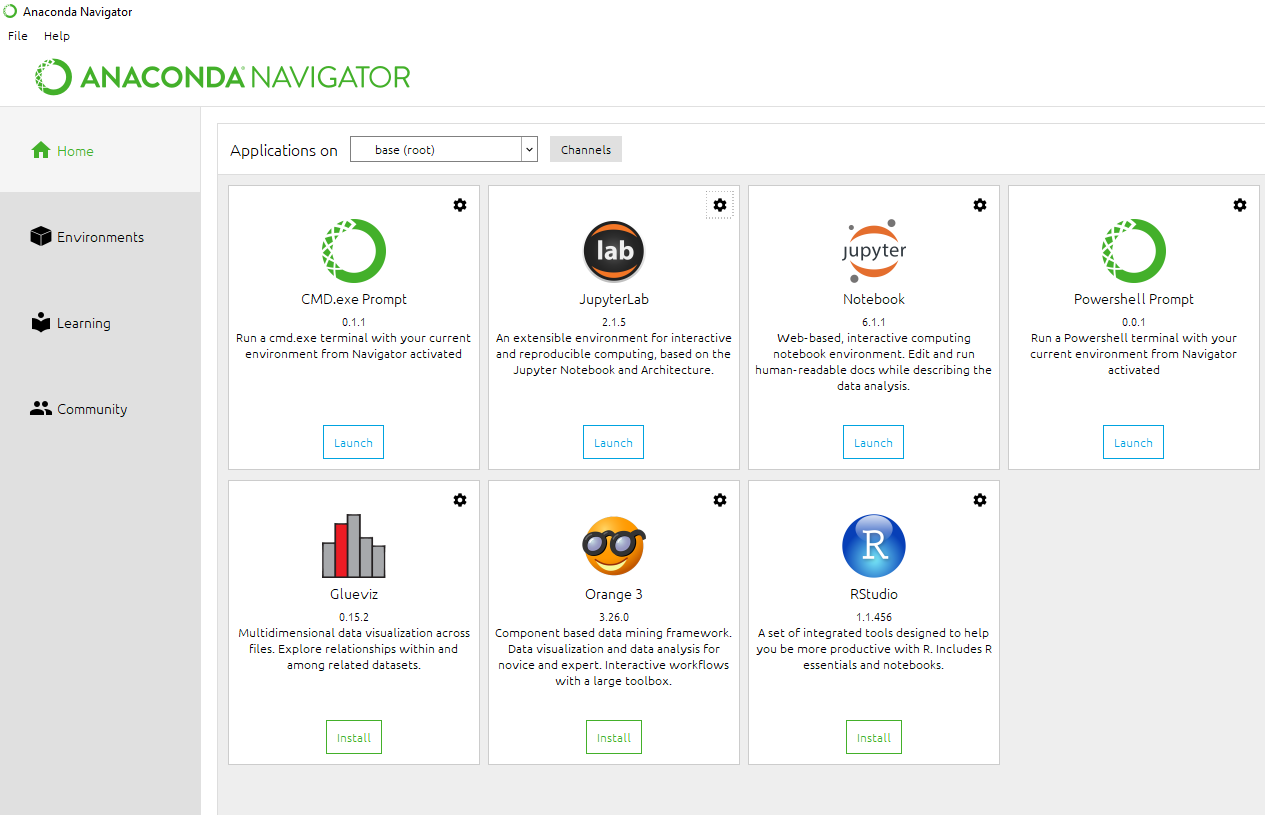

Prerequisites to follow this example are Python version 3 and Jupyter notebook. You can just install Anaconda and it will get everything for you. Also, little bit of Python and ML basics including data classification is required. We will be using XGBoost and Scikit-learn (Python) libraries for our example.

Having installed Anaconda, you open the Anaconda Navigator and land on the Home screen. There, click the Install button for JupyterLab to make it accessible in future. Next, update all the libraries includied. Click on the Anaconda home screen on “CMD.exe Prompt” and enter conda update --all --y. This will online-update all the pre-installed libraries. But you will need more for this project that will install in the latest version.

Downloads:

The classification problem can be divided into the following steps:

- Load

- Explore

- Augmentation

- Train, then Predict Test Facies

- Evaluate

Let’s get going!

Open Jupyter Lab (see screenshot above) and navigate in the file system pane on the left to the folder of the downloaded notebok Import necessary libraries. If you never worked with these then you may have to install them first with !conda install matplotlib for example.

%matplotlib inline

import seaborn as sns

import matplotlib.pyplot as plt

import matplotlib as mpl

import numpy as np

import pandas as pd

from sklearn.impute import SimpleImputer

from sklearn import preprocessing

from xgboost.sklearn import XGBClassifier

from skopt import BayesSearchCV

from skopt.space import Real, Categorical, Integer

from scipy.signal import medfilt

from sklearn.metrics import f1_score

from classification_utilities import make_facies_log_plot

from classification_utilities import compare_facies_plot

Step 1: Load

First, read the training data from csv to a Pandas dataframe, define the logging parameter names, facies names, and some standardized facies colors. At the end of this cell, impute all missing values (especially in the “PE” parameter) with the average of the parameter.

data = pd.read_csv('facies_vectors.csv')

# Define groups of parameters

# Wireline measurements -> lnput features

feature_names = ['GR', 'ILD_log10', 'DeltaPHI', 'PHIND', 'PE', 'NM_M', 'RELPOS']

# Geological descriptins -> labels

facies_names = ['SS', 'CSiS', 'FSiS', 'SiSh', 'MS', 'WS', 'D', 'PS', 'BS']

# Color codes

facies_colors = ['#F4D03F', '#F5B041','#DC7633','#6E2C00', '#1B4F72','#2E86C1', '#AED6F1', '#A569BD', '#196F3D']

# Store features and labels

X = data[feature_names].values

y = data['Facies'].values

# Store well labels and depths

well = data['Well Name'].values

depth = data['Depth'].values

# Fill 'PE' missing values with mean

imp = SimpleImputer(missing_values=np.nan, copy=False, strategy="mean")

imp.fit(X)

X = imp.transform(X)

Step 2: Explore

Data Exploration: Feature distribution

Each parameter compared with each other. Colors pertain to facies type.

def plot_feature_stats(X, y, feature_names, facies_colors, facies_names):

# Remove NaN

nan_idx = np.any(np.isnan(X), axis=1)

X = X[np.logical_not(nan_idx), :]

y = y[np.logical_not(nan_idx)]

# Merge features and labels into a single DataFrame

features = pd.DataFrame(X, columns=feature_names)

labels = pd.DataFrame(y, columns=['Facies'])

for f_idx, facies in enumerate(facies_names):

labels[labels[:] == f_idx] = facies

data = pd.concat((labels, features), axis=1)

# Plot features statistics

facies_color_map = {}

for ind, label in enumerate(facies_names):

facies_color_map[label] = facies_colors[ind]

sns.pairplot(data, hue='Facies', palette=facies_color_map, hue_order=list(reversed(facies_names)))

plot_feature_stats(X, y, feature_names, facies_colors, facies_names)

Data Exploration: Facies distribution

How abundant are facies across all wells?

# workaround to increase figure size

mpl.rcParams['figure.figsize'] = (20.0, 10.0)

inline_rc = dict(mpl.rcParams)

# Data Exploration: Facies per well

for w_idx, w in enumerate(np.unique(well)):

# Define plot ID in mosaic

ax = plt.subplot(3, 4, w_idx+1)

# Define histogram settings

hist = np.histogram(y[well == w], bins=np.arange(len(facies_names)+1)+.5)

plt.bar(np.arange(len(hist[0])), hist[0], color=facies_colors, align='center')

# Cosmetic settings

ax.set_xticks(np.arange(len(hist[0])))

ax.set_xticklabels(facies_names)

ax.set_title(w)

Data Exploration: Features per well present

How complete is the data after imputing?

for w_idx, w in enumerate(np.unique(well)):

# Define plot ID in mosaic

ax = plt.subplot(3, 4, w_idx+1)

# Define histogram settings

hist = np.logical_not(np.any(np.isnan(X[well == w, :]), axis=0))

plt.bar(np.arange(len(hist)), hist, color=facies_colors, align='center')

# Cosmetic settings

ax.set_xticks(np.arange(len(hist)))

ax.set_xticklabels(feature_names)

ax.set_yticks([0, 1])

ax.set_yticklabels(['miss', 'hit'])

ax.set_title(w)

Show the first well in the training set!

make_facies_log_plot(data[data['Well Name'] == 'SHRIMPLIN'], pred_column='Facies', facies_colors=facies_colors)

Step 3: Augmentation

Augmentation is a crucial step in improving prediction quality. The process takes features and depths and calculates new features with a sliding window moving across depth.

# Feature windows concatenation function

def augment_features_window(X, N_neig):

# Parameters

N_row = X.shape[0]

N_feat = X.shape[1]

# Zero padding

X = np.vstack((np.zeros((N_neig, N_feat)), X, (np.zeros((N_neig, N_feat)))))

# Loop over windows

X_aug = np.zeros((N_row, N_feat*(2*N_neig+1)))

for r in np.arange(N_row)+N_neig:

this_row = []

for c in np.arange(-N_neig,N_neig+1):

this_row = np.hstack((this_row, X[r+c]))

X_aug[r-N_neig] = this_row

return X_aug

# Feature gradient computation function

def augment_features_gradient(X, depth):

# Compute features gradient

d_diff = np.diff(depth).reshape((-1, 1))

d_diff[d_diff==0] = 0.001

X_diff = np.diff(X, axis=0)

X_grad = X_diff / d_diff

# Compensate for last missing value

X_grad = np.concatenate((X_grad, np.zeros((1, X_grad.shape[1]))))

return X_grad

# Feature augmentation function

def augment_features(X, well, depth, N_neig=1):

# Augment features

X_aug = np.zeros((X.shape[0], X.shape[1]*(N_neig*2+2)))

for w in np.unique(well):

w_idx = np.where(well == w)[0]

X_aug_win = augment_features_window(X[w_idx, :], N_neig)

X_aug_grad = augment_features_gradient(X[w_idx, :], depth[w_idx])

X_aug[w_idx, :] = np.concatenate((X_aug_win, X_aug_grad), axis=1)

# Find padded rows

padded_rows = np.unique(np.where(X_aug[:, 0:7] == np.zeros((1, 7)))[0])

return X_aug, padded_rows

4. Train, then Predict Test Facies

The first cell contains the XGBoost classifier controlled by the Scikit-Optimize (Skopt) “BayesSearchCV” function.

There are two main differences when performing Bayesian Optimization using Skopt’s BayesSearchCV. When creating your search space you need to make each hyperparameter’s space a probability distribution as opposed to using lists like GridSearchCV. Skopt makes this easy for you with their library skopt.space which lets us import “Real”, “Integer”, and “Categorical” to create the probability distributions.

- Real: Continuous hyperparameter space.

- Integer: Discrete hyperparameter space.

- Categorical: Categorical hyperparameter space.

Below you can see examples of using both the categorical and integer functions. For categorical spaces simply input a list inside the function. For Integer spaces input the minimum and maximum values you want BayesSearchCV to explore.

The function on_step allows us to implement a form of early stopping (if needed) and also prints out the score after each iteration.

search_space = {"colsample_bylevel": Real(0.6,1),

"colsample_bytree":Real(0.6, 0.8),

"gamma": Real(0.01,5),

"learning_rate": Real(0.0001, 1),

"max_delta_step": Real(0.1, 10),

"max_depth": Integer(3, 15),

"min_child_weight": Real(1, 500),

"n_estimators": Integer(10,200),

"reg_alpha": Real(0.1,100),

"reg_lambda": (0.1, 100),

"subsample": (0.4, 1.0)

}

def on_step(optim_result):

"""

Callback meant to view scores after

each iteration while performing Bayesian

Optimization in Skopt"""

score = XGB_bayes_search.best_score_

print("best train score: {}".format(score))

# Train and test a classifier

def train_and_test(X_tr, y_tr, X_ts, well_ts):

# Feature normalization

scaler = preprocessing.RobustScaler(quantile_range=(25.0, 75.0)).fit(X_tr)

X_tr = scaler.transform(X_tr)

X_ts = scaler.transform(X_ts)

# Train classifier

clf = XGBClassifier()

global XGB_bayes_search

XGB_bayes_search = BayesSearchCV(clf, search_space, n_iter=75, scoring="f1_weighted", n_jobs=-1, cv=5)

XGB_bayes_search.fit(X_tr, y_tr, callback=on_step)

# Test classifier

y_ts_hat = XGB_bayes_search.predict(X_ts)

# Clean isolated facies for each well

for w in np.unique(well_ts):

y_ts_hat[well_ts==w] = medfilt(y_ts_hat[well_ts==w], kernel_size=5)

return y_ts_hat

The following cell brings everything together and starts the XGBoost algorithm and predicts test and train facies labels.

# Load testing data

test_data = pd.read_csv('validation_data_nofacies.csv')

# Prepare training data

X_tr = X

y_tr = y

# Augment features

X_tr, padded_rows = augment_features(X_tr, well, depth)

# Removed padded rows

X_tr = np.delete(X_tr, padded_rows, axis=0)

y_tr = np.delete(y_tr, padded_rows, axis=0)

# Prepare test data

well_ts = test_data['Well Name'].values

depth_ts = test_data['Depth'].values

X_ts = test_data[feature_names].values

# Augment features

X_ts, padded_rows = augment_features(X_ts, well_ts, depth_ts)

# Predict test labels

y_ts_hat = train_and_test(X_tr, y_tr, X_ts, well_ts)

# Add prediction to test_data for plotting

test_data['Prediction'] = y_ts_hat

# Predict train labels,

y_tr_hat = XGB_bayes_search.predict(X_tr)

# Add prediction to data for plotting

data = data.join(pd.DataFrame(y_tr_hat), how='inner')

data.rename(columns={0: "Prediction"}, inplace=True)

print("\nBest score: {}\nBest params: {}".format(XGB_bayes_search.best_score_,XGB_bayes_search.best_params_))

Hyperparameter searching can be tedious, but there are tools that can do the tedious work for you. For example, Bayesian Optimization improves accuracy similarly as a Grid Search or Randomized Search, but automatically improves accuracy within the ranges given in the search space. Note that the Bayesian Optimization starts with randomized hyperparameters. This means it can sometimes require more iterations to arrive at the best accuracy possible. In my experience, the algorithm could reach maximum after 50 iterations, sometimes after 150. Play with the iterations and avoid excessive training durations since it takes longer and longer to improve the score. There will be a point of diminishing returns.

The train F1 achieved here was 0.571.

Step 5: Evaluate

The F1 score of predicted test labels vs ground truth test labels was calculated by the organizers. Luckily, the correct test facies labels were provided in the GitHub repo after the contest concluded so we can calculate this by ourselves using the F1 scorer provided in Scikit-Learn. The F1 Scorer receives the variables y_hat_ts and y_ts_true.

# F1 score on test wells

y_ts_true = pd.read_csv('blind_stuart_crawford_core_facies.csv')

# rename columns for merging

y_ts_true.rename(columns={'Depth.ft': 'Depth', 'LithCode': 'Facies'}, inplace=True)

# remove facies "11", since that was not in training

y_ts_true = y_ts_true[y_ts_true!=11]

test_data = test_data.merge(y_ts_true, on='Depth', how='inner')

test_data.dropna(inplace=True)

print("F1_weighted: ",f1_score(test_data['Facies'], test_data['Prediction'], average='weighted'))

The test F1 achieved here was 0.42.

| Place | Team | Train F1 Score | Test F1 Score |

|---|---|---|---|

| N/A | ChristianHallerX | 0.571 | 0.478 |

| 1 | LA_Team (Mosser, de la Fuente) | ??? | 0.641 |

| 2 | ispl (Bestagini, Tuparo, Lipari) | 0.564 | 0.640 |

| 3 | SHandPR | 0.560 | 0.631 |

| 4 | HouMath | 0.563 | 0.630 |

| 5 | esaTeam | 0.566 | 0.629 |

It is unclear how the organizers calculated the Test F1. Normally, test/validation scores (unknown data) are lower than training scores but here they are higher. What is wrong here?

# Compare true and predicted facies on first TREAINING well

compare_facies_plot(data[data['Well Name'] == 'SHRIMPLIN'], pred_column='Prediction', facies_colors=facies_colors)

# Compare true and predicted facies on first TEST well

compare_facies_plot(test_data[test_data['Well Name'] == 'STUART'], pred_column='Prediction', facies_colors=facies_colors)

# Compare true and predicted facies on second TEST well

compare_facies_plot(test_data[test_data['Well Name'] == 'CRAWFORD'], pred_column='Prediction', facies_colors=facies_colors)

Conclusion

Due to the proprietary nature of many geoscience data, the data size is limited to what was provided in the SEG ML contest, which is a relatively small data set compared to what treasure troves the AI pioneers in the tech sector can dispose of. Many of the algorithms common today work bettter (generalize more reliably) with massive data sets that are much larger than the 4000 samples used here. While Scikit-Optimize and XGBoost did not dramatically improve the accuracy of the model, it does simplify the model setup and resulted in a best score for the training set F1. However, this provides a stepping stone for geoscientists to further explore and apply machine learning techniques to lithofacies prediction, while employing their domain knowledge to further enhance the speed and accuracy of predictive models. Potential applications include validating velocity models for seismic data, fault interpretation and well top interpretation.

Acknowledgements:

Thanks to Matt Hall (Agile) and Brendon Hall (Enthought) for organizing the SEG Machine Learning challenge and the Kansas Geological Survey for their data.

References

Hall, B. (2016). Facies classification using machine learning. The Leading Edge, 35(10), 906-909.

Friedman, J. H. (2000) Greedy function approximation: A gradient boosting machine: Annals of Statistics, 29, 1189–1232.